Robust Loss Functions for Least Squares Optimization

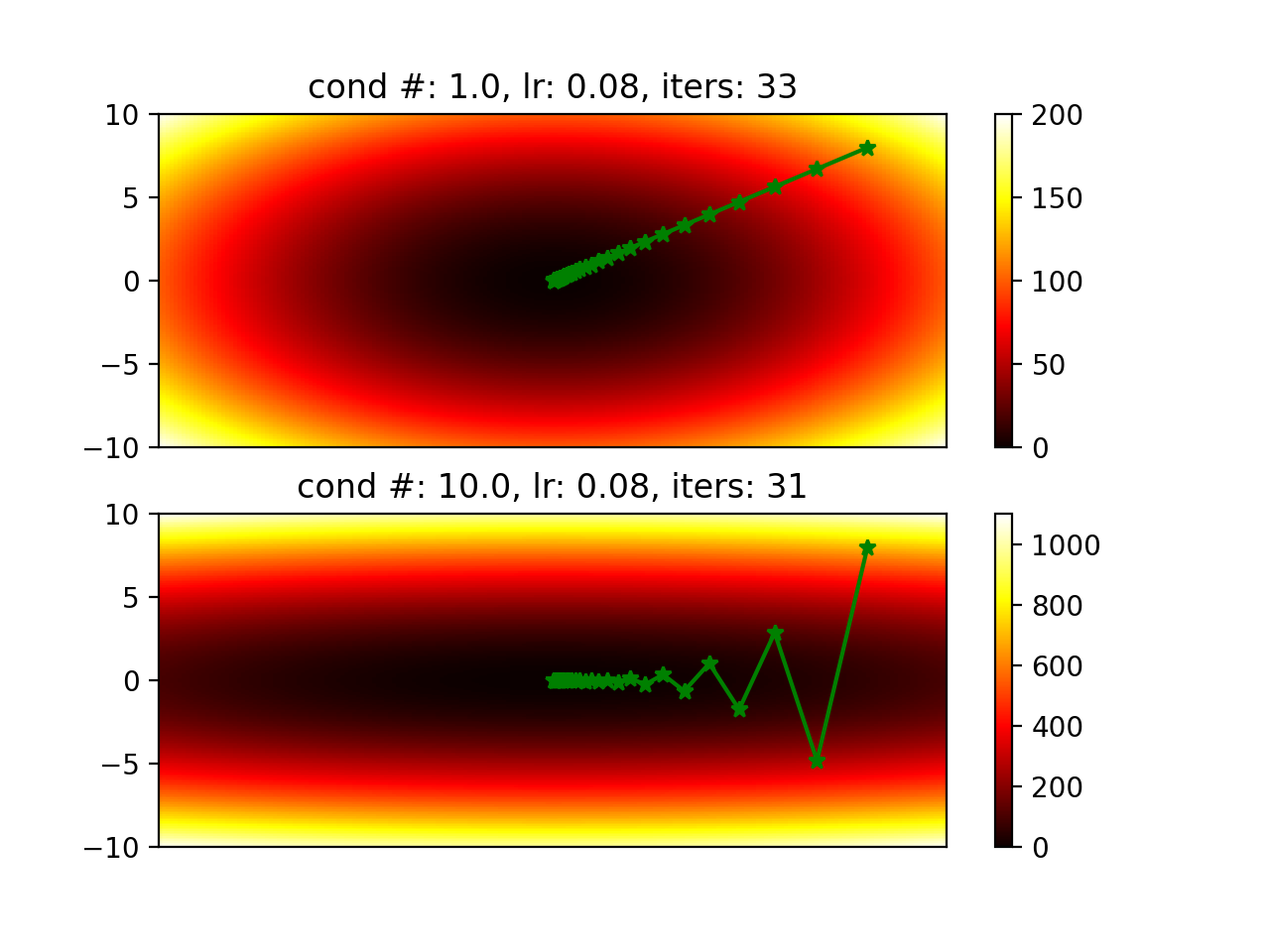

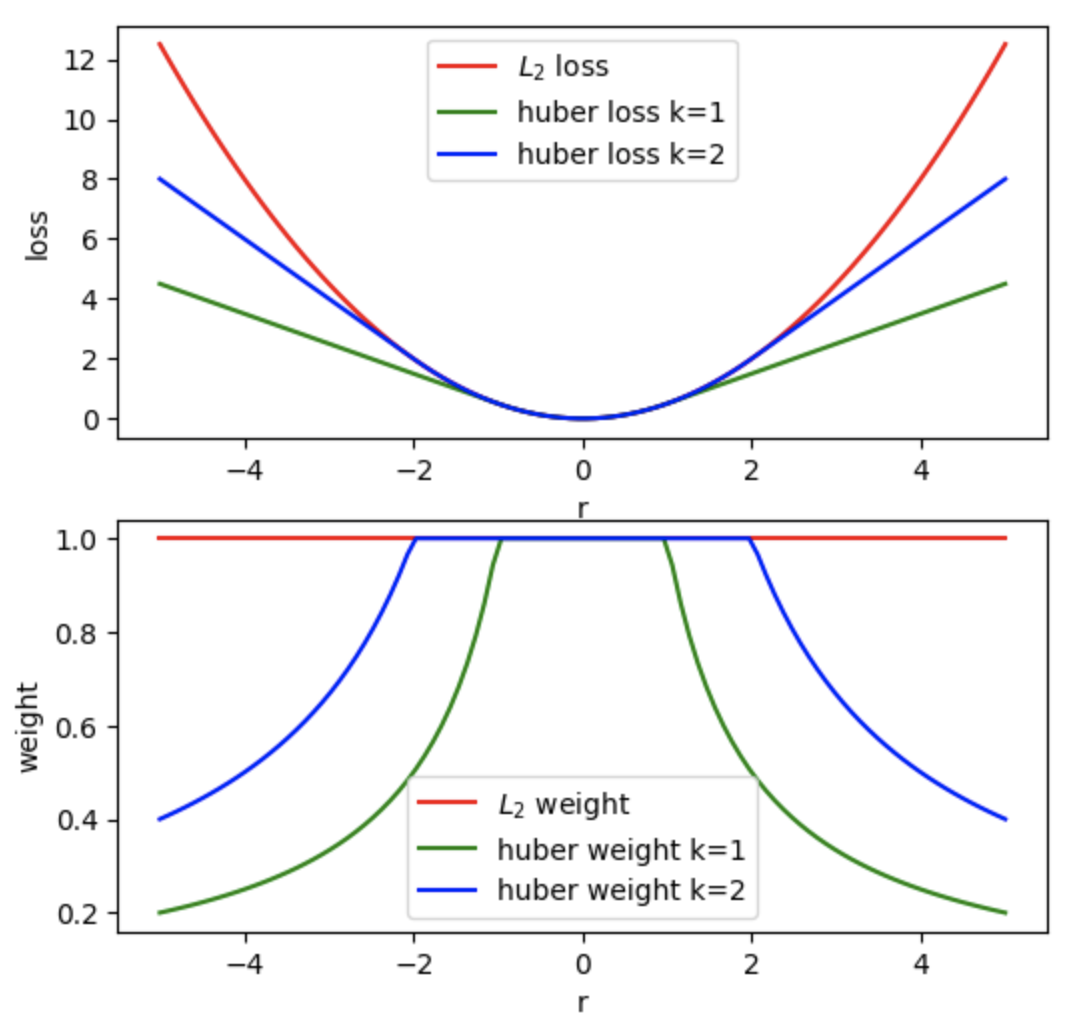

In most robotics applications, maximizing the posterior distribution is converted into a least squares problem under the assumption that the residuals follow a Gaussian distribution. In practice, however, errors are often not Gaussian distributed, and because the cost grows quadratically with the residual, a few outliers with large residuals can dominate the naive least squares solution. In this post, we will first explore robust kernel approaches, then try to model residuals using Gaussian mixtures.
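To make the outlier problem concrete, here is a minimal sketch that compares a plain mean (the least squares estimate of a constant) with a Huber-weighted estimate computed by iteratively reweighted least squares. The Huber kernel is just one common robust kernel picked for illustration; the function names, the threshold `delta`, and the sample data are all assumptions made for this example.

```python
def huber_weight(residual, delta=1.0):
    """IRLS weight of the Huber kernel: 1 inside the threshold, delta/|r| outside."""
    magnitude = abs(residual)
    return 1.0 if magnitude <= delta else delta / magnitude


def robust_mean(samples, delta=1.0, iterations=20):
    """Estimate a constant from noisy samples with Huber-weighted least squares."""
    # Start from the ordinary least squares solution (the plain mean).
    estimate = sum(samples) / len(samples)
    for _ in range(iterations):
        # Down-weight samples whose residual exceeds delta.
        weights = [huber_weight(x - estimate, delta) for x in samples]
        # Solve the weighted least squares problem in closed form.
        estimate = sum(w * x for w, x in zip(weights, samples)) / sum(weights)
    return estimate


# Five inliers near 1.0 and one gross outlier (made-up data).
samples = [1.0, 1.1, 0.9, 1.05, 0.95, 50.0]
plain_mean = sum(samples) / len(samples)
huber_mean = robust_mean(samples)
print(plain_mean, huber_mean)
```

The plain mean is dragged far toward the outlier, while the Huber estimate stays close to the inliers because the outlier's influence is capped at `delta`.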

Lie Theory for State Estimation in Robotics

A tutorial on Lie theory as used in state estimation for robotics.

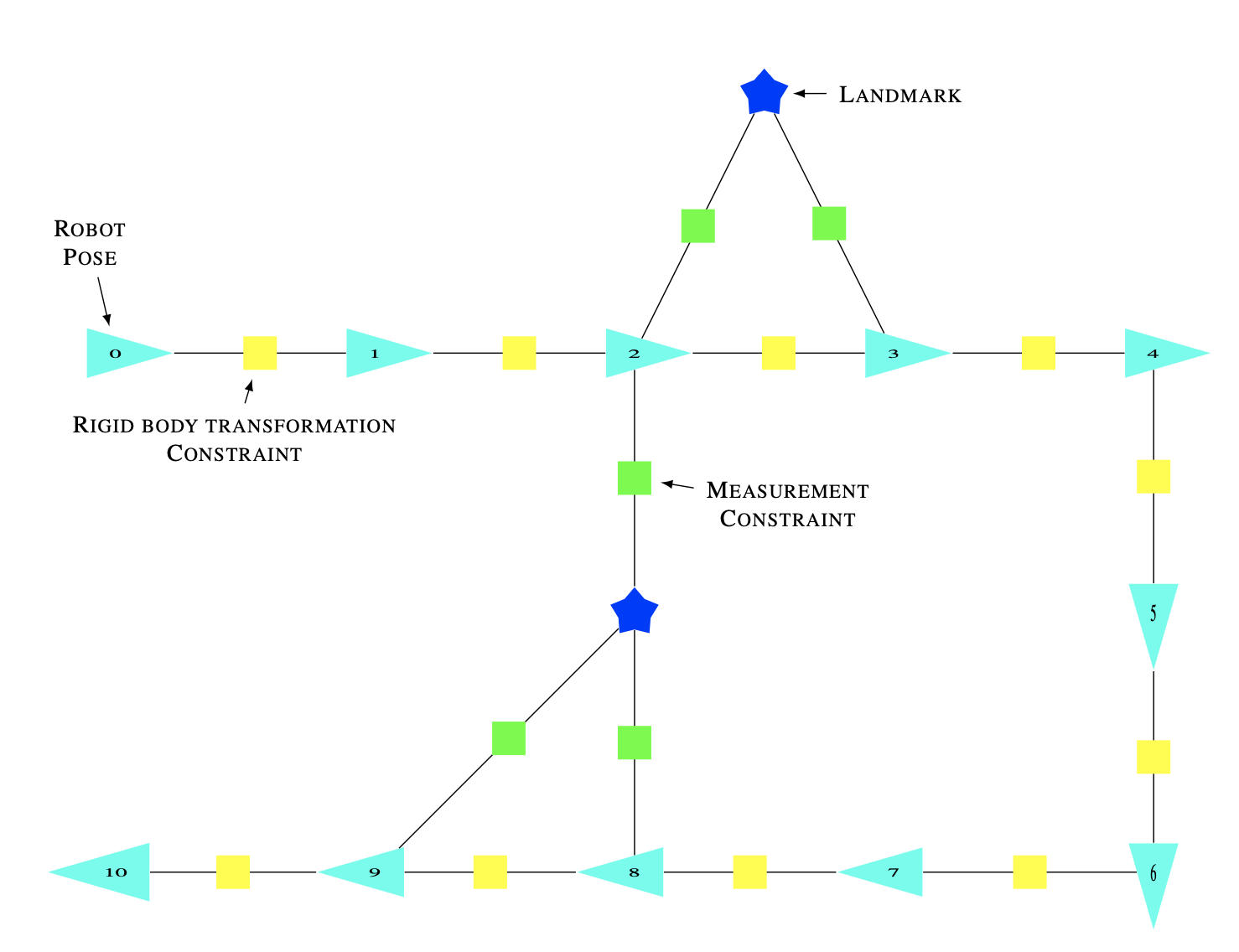

Factor Graph

A factor graph is a nice representation for optimization problems. It allows us to specify a joint density as a product of factors. It can be used to specify any function $\Phi(X)$ over a set of variables $X$, not just probability densities, though in SLAM we normally use Gaussian densities as the factors.
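As a rough illustration of "a product of factors", the sketch below builds a tiny one-dimensional graph with two variables and three Gaussian factors, then evaluates $\Phi(X)$ for two candidate assignments. The variable names `x1` and `x2`, the measurements, and the noise values are invented for this example and do not come from any particular SLAM library.

```python
import math


def gaussian_factor(residual, sigma):
    """Unnormalized Gaussian factor value for a scalar residual."""
    return math.exp(-0.5 * (residual / sigma) ** 2)


# Each entry lists the variables a factor touches and the factor function itself.
factors = [
    (("x1",), lambda v: gaussian_factor(v["x1"] - 0.0, 0.1)),                    # prior on x1
    (("x1", "x2"), lambda v: gaussian_factor((v["x2"] - v["x1"]) - 1.0, 0.2)),  # odometry
    (("x2",), lambda v: gaussian_factor(v["x2"] - 1.1, 0.3)),                    # measurement of x2
]


def phi(values):
    """Evaluate Phi(X) as the product of all factors."""
    product = 1.0
    for _, factor in factors:
        product *= factor(values)
    return product


consistent = phi({"x1": 0.0, "x2": 1.0})
inconsistent = phi({"x1": 0.0, "x2": 3.0})
print(consistent, inconsistent)
```

With Gaussian factors, taking the negative logarithm of $\Phi(X)$ turns the product into a sum of squared, noise-weighted residuals, which is why maximizing it becomes a least squares problem.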

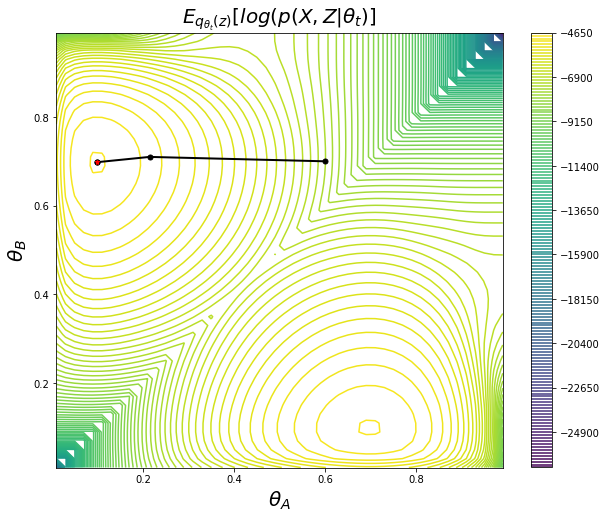

Expectation Maximization

The expectation–maximization (EM) algorithm is an iterative method for finding (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models that depend on unobserved latent variables.
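A small sketch of the idea, assuming a one-dimensional mixture of two Gaussians where the latent variable is which component generated each sample. The function names, initial guesses, and data are made up for illustration, and the result is only a local optimum that depends on the initialization.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a 1D Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def em_two_gaussians(data, mean_a, mean_b, iterations=50):
    """Fit a two-component 1D Gaussian mixture with EM, starting from two mean guesses."""
    var_a = var_b = 1.0
    weight_a = 0.5
    n = len(data)
    for _ in range(iterations):
        # E-step: responsibility of component A for each sample.
        resp = []
        for x in data:
            pa = weight_a * normal_pdf(x, mean_a, var_a)
            pb = (1.0 - weight_a) * normal_pdf(x, mean_b, var_b)
            resp.append(pa / (pa + pb))
        # M-step: re-estimate the parameters from the soft assignments.
        na = sum(resp)
        nb = n - na
        mean_a = sum(r * x for r, x in zip(resp, data)) / na
        mean_b = sum((1.0 - r) * x for r, x in zip(resp, data)) / nb
        # Floor the variances so a component cannot collapse onto a single point.
        var_a = max(sum(r * (x - mean_a) ** 2 for r, x in zip(resp, data)) / na, 1e-6)
        var_b = max(sum((1.0 - r) * (x - mean_b) ** 2 for r, x in zip(resp, data)) / nb, 1e-6)
        weight_a = na / n
    return (weight_a, mean_a, var_a), (1.0 - weight_a, mean_b, var_b)


# Two well-separated clusters around 0 and 5 (made-up data).
data = [-0.2, -0.1, 0.0, 0.1, 0.2, 4.8, 4.9, 5.0, 5.1, 5.2]
component_a, component_b = em_two_gaussians(data, mean_a=-1.0, mean_b=6.0)
print(component_a, component_b)
```

The E-step fills in the unobserved assignments with their expected values under the current parameters, and the M-step maximizes the likelihood given those soft assignments.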